Performance Testing With K6

Learn how to use Grafana k6 to run different kinds of performance tests.


Introduction to Performance Testing

Performance testing is a type of software testing that assesses how a system performs in terms of responsiveness, stability, and scalability under various workloads.

Here’s a breakdown of key aspects of performance testing:

Goals of Performance Testing

- Determine responsiveness: Measure how quickly the application responds to user requests.

- Evaluate stability: Assess the application’s ability to remain stable and avoid crashes under normal and peak loads.

- Test scalability: Determine if the application can handle increasing user loads and data volumes without performance degradation.

- Identify bottlenecks: Pinpoint areas in the system that are causing performance issues.

- Meet performance requirements: Verify that the application meets predefined performance criteria.

Types of Performance Testing

- Load testing: Simulates expected user traffic to evaluate the application’s performance under normal conditions.

- Stress testing: Subjects the application to extreme loads to identify its breaking point and assess its behavior under stress.

- Endurance (Soak) testing: Evaluates the application’s performance over an extended period to uncover issues like memory leaks or resource exhaustion.

- Spike testing: Tests the application’s reaction to sudden spikes in user traffic.

- Scalability testing: Determines the application’s ability to handle increasing loads and data volumes.

Key Metrics in Performance Testing

- Response time: The time it takes for the application to respond to a user request.

- Throughput: The number of transactions or requests the application can handle within a given time frame.

- Resource utilization: The amount of CPU, memory, and network bandwidth the application consumes.

- Error rate: The number or percentage of requests that fail during testing.
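To make these metrics concrete, here is a minimal sketch in plain JavaScript that derives them from a handful of request records. The `samples` array, the field names, and the 2-second window are made-up values for illustration, and the percentile uses the simple nearest-rank method, which may differ slightly from how a given tool interpolates.

```javascript
// Hypothetical request records: duration in ms and whether the request failed.
const samples = [
  { durationMs: 120, failed: false },
  { durationMs: 200, failed: false },
  { durationMs: 180, failed: true },
  { durationMs: 950, failed: false },
  { durationMs: 150, failed: false },
]

// Nearest-rank percentile: the smallest value with at least p% of samples at or below it.
function percentile(values, p) {
  const sorted = [...values].sort((a, b) => a - b)
  const rank = Math.ceil((p / 100) * sorted.length)
  return sorted[Math.max(rank, 1) - 1]
}

// Summarize response time, throughput and error rate for one measurement window.
function summarize(records, windowSeconds) {
  const durations = records.map((r) => r.durationMs)
  const failures = records.filter((r) => r.failed).length
  return {
    avgMs: durations.reduce((sum, d) => sum + d, 0) / durations.length,
    p95Ms: percentile(durations, 95),
    throughputPerSec: records.length / windowSeconds,
    errorRate: failures / records.length,
  }
}

const summary = summarize(samples, 2)
console.log(summary) // { avgMs: 320, p95Ms: 950, throughputPerSec: 2.5, errorRate: 0.2 }
```

Note how a single slow request barely moves the average but dominates the 95th percentile, which is why percentiles are usually reported alongside averages.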

This post focuses only on running the tests. Ideally, the metrics should be read from your integrated observability tools, such as Datadog.

Introduction to K6

Grafana k6 is an open-source, developer-friendly, and extensible load testing tool. While you write k6 scripts in JavaScript, under the hood k6 is actually written in Go.

It uses Goja, a JavaScript engine written in pure Go that supports ES2015 (ES6). This means that k6 can execute JavaScript code, but it doesn’t run on Node.js, so Node.js built-in modules are not available. Instead, scripts are executed by the Go-based JavaScript engine.

This combination of Go for the core engine and JavaScript for scripting provides k6 with several advantages:

- Performance: Go is known for its efficiency and concurrency, making k6 capable of handling a large number of virtual users with minimal resource consumption.

- Developer-friendliness: JavaScript is a widely used language, making it easy for developers to write and understand k6 scripts.

So, in essence, k6 leverages the power of Go for its performance and the familiarity of JavaScript for its scripting.

Installing K6

    brew install k6                              # Mac
    docker pull grafana/k6                       # Docker
    docker pull grafana/k6:master-with-browser   # Docker with browser

For other platforms, see the official setup documents.

Your First Script

    import http from 'k6/http'

    // Read the reference doc for full options
    export const options = {
      discardResponseBodies: true, // Discard, if you are not doing any checks with response
    }

    // The default exported function is gonna be picked up by k6 as the entry point for the test script.
    // It will be executed repeatedly in "iterations" for the whole duration of the test.
    export default function () {
      // Make a GET request to the target URL
      // In actual code, this should rotate between long list of actual users
      http.get('https://test-api.k6.io')
    }

Output:

    scenarios: (100.00%) 1 scenario, 1 max VUs, 10m30s max duration (incl. graceful stop):
      * default: 1 iterations for each of 1 VUs (maxDuration: 10m0s, gracefulStop: 30s)

    data_received..................: 16 kB 17 kB/s
    data_sent......................: 450 B 486 B/s
    http_req_blocked...............: avg=619.67ms min=619.67ms med=619.67ms max=619.67ms p(90)=619.67ms p(95)=619.67ms
    http_req_connecting............: avg=275.78ms min=275.78ms med=275.78ms max=275.78ms p(90)=275.78ms p(95)=275.78ms
    http_req_duration..............: avg=305.46ms min=305.46ms med=305.46ms max=305.46ms p(90)=305.46ms p(95)=305.46ms
      { expected_response:true }...: avg=305.46ms min=305.46ms med=305.46ms max=305.46ms p(90)=305.46ms p(95)=305.46ms
    http_req_failed................: 0.00% 0 out of 1
    http_req_receiving.............: avg=76µs min=76µs med=76µs max=76µs p(90)=76µs p(95)=76µs
    http_req_sending...............: avg=106µs min=106µs med=106µs max=106µs p(90)=106µs p(95)=106µs
    http_req_tls_handshaking.......: avg=315.15ms min=315.15ms med=315.15ms max=315.15ms p(90)=315.15ms p(95)=315.15ms
    http_req_waiting...............: avg=305.28ms min=305.28ms med=305.28ms max=305.28ms p(90)=305.28ms p(95)=305.28ms
    http_reqs......................: 1 1.079472/s
    iteration_duration.............: avg=926.11ms min=926.11ms med=926.11ms max=926.11ms p(90)=926.11ms p(95)=926.11ms
    iterations.....................: 1 1.079472/s

    running (00m00.9s), 0/1 VUs, 1 complete and 0 interrupted iterations

Running it for N Iterations

- Run the test for a fixed number of iterations.

- This is useful when you want to run a test a specific number of times.
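As a rough sanity check for fixed-iteration runs, the wall-clock time can be estimated from the iteration count, the number of VUs, and the average iteration duration. The helper below is a back-of-the-envelope sketch in plain JavaScript (the function name is made up for illustration); it assumes the iterations are spread evenly across VUs. The 671.62 ms figure is the average `iteration_duration` from the sample run in this section, and the 5-VU case is hypothetical.

```javascript
// Rough wall-clock estimate for a fixed-iteration run.
// Assumes iterations are spread evenly across VUs and each takes about avgIterationMs.
function estimateRunSeconds(iterations, vus, avgIterationMs) {
  const iterationsPerVu = Math.ceil(iterations / vus)
  return (iterationsPerVu * avgIterationMs) / 1000
}

// 10 iterations on 1 VU, as in the sample run (reported as 00m06.7s).
console.log(estimateRunSeconds(10, 1, 671.62).toFixed(1)) // "6.7"

// Hypothetical: the same 10 iterations shared among 5 VUs.
console.log(estimateRunSeconds(10, 5, 671.62).toFixed(1)) // "1.3"
```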

    import http from 'k6/http'

    export const options = {
      discardResponseBodies: true,
      iterations: 10, // Run this 10 times
    }

    export default function () {
      http.get('https://test-api.k6.io')
    }

Output:

    scenarios: (100.00%) 1 scenario, 1 max VUs, 10m30s max duration (incl. graceful stop):
      * default: 10 iterations shared among 1 VUs (maxDuration: 10m0s, gracefulStop: 30s)

    data_received..................: 119 kB 18 kB/s
    data_sent......................: 1.4 kB 204 B/s
    http_req_blocked...............: avg=53.04ms min=2µs med=4µs max=530.45ms p(90)=53.05ms p(95)=291.75ms
    http_req_connecting............: avg=22.91ms min=0s med=0s max=229.15ms p(90)=22.91ms p(95)=126.03ms
    http_req_duration..............: avg=618.37ms min=459.38ms med=614.33ms max=865.14ms p(90)=641.76ms p(95)=753.45ms
      { expected_response:true }...: avg=618.37ms min=459.38ms med=614.33ms max=865.14ms p(90)=641.76ms p(95)=753.45ms
    http_req_failed................: 0.00% 0 out of 10
    http_req_receiving.............: avg=30.88ms min=41µs med=57µs max=308.31ms p(90)=30.94ms p(95)=169.62ms
    http_req_sending...............: avg=57.7µs min=10µs med=15µs max=447µs p(90)=59.1µs p(95)=253.04µs
    http_req_tls_handshaking.......: avg=27.38ms min=0s med=0s max=273.89ms p(90)=27.38ms p(95)=150.64ms
    http_req_waiting...............: avg=587.43ms min=308.61ms med=613.27ms max=865.08ms p(90)=641.37ms p(95)=753.22ms
    http_reqs......................: 10 1.488825/s
    iteration_duration.............: avg=671.62ms min=459.46ms med=614.85ms max=1.13s p(90)=892.54ms p(95)=1.01s
    iterations.....................: 10 1.488825/s
    vus............................: 1 min=1 max=1
    vus_max........................: 1 min=1 max=1

    running (00m06.7s), 0/1 VUs, 10 complete and 0 interrupted iterations
    default ✓ [======================================] 1 VUs 00m06.7s/10m0s 10/10 shared iters

Constant Users

- Run a test with a constant number of Virtual Users (VUs).

- This is useful when you want to maintain a constant load on the system (a constant number of concurrent requests that the system handles at a time).

    import http from 'k6/http'

    export const options = {
      discardResponseBodies: true,
      vus: 5, // Option names are case-sensitive: use `vus`, not `VUs`
      duration: '10s',
    }

    export default function () {
      http.get('https://test-api.k6.io')
    }

Output:

    scenarios: (100.00%) 1 scenario, 5 max VUs, 40s max duration (incl. graceful stop):
      * default: 5 looping VUs for 10s (gracefulStop: 30s)

    data_received..................: 1.6 MB 157 kB/s
    data_sent......................: 16 kB 1.6 kB/s
    http_req_blocked...............: avg=18.69ms min=1µs med=4µs max=729.92ms p(90)=6.1µs p(95)=10.04µs
    http_req_connecting............: avg=7.98ms min=0s med=0s max=235.23ms p(90)=0s p(95)=0s
    http_req_duration..............: avg=344.09ms min=211.83ms med=236.61ms max=1.76s p(90)=510.04ms p(95)=668.69ms
      { expected_response:true }...: avg=344.09ms min=211.83ms med=236.61ms max=1.76s p(90)=510.04ms p(95)=668.69ms
    http_req_failed................: 0.00% 0 out of 140
    http_req_receiving.............: avg=4.89ms min=17µs med=45µs max=232.95ms p(90)=71.09µs p(95)=104.54µs
    http_req_sending...............: avg=14.32µs min=7µs med=13µs max=57µs p(90)=19.1µs p(95)=24µs
    http_req_tls_handshaking.......: avg=9.77ms min=0s med=0s max=468.48ms p(90)=0s p(95)=0s
    http_req_waiting...............: avg=339.18ms min=211.78ms med=236.53ms max=1.76s p(90)=483.51ms p(95)=668.64ms
    http_reqs......................: 140 13.56748/s
    iteration_duration.............: avg=362.91ms min=212.13ms med=236.77ms max=1.76s p(90)=642.78ms p(95)=703.69ms
    iterations.....................: 140 13.56748/s
    vus............................: 5 min=5 max=5
    vus_max........................: 5 min=5 max=5

    running (10.3s), 0/5 VUs, 140 complete and 0 interrupted iterations
    default ✓ [======================================] 5 VUs 10s

Ramping Users

    import http from 'k6/http'

    export const options = {
      discardResponseBodies: true,
      stages: [
        { duration: '5s', target: 10 }, // traffic ramp-up from 1 to 10 users over 5 seconds
        { duration: '10s', target: 10 }, // stay at 10 users for 10 seconds
      ],
    }

    export default function () {
      http.get('https://test-api.k6.io')
    }

Output:

    scenarios: (100.00%) 1 scenario, 10 max VUs, 45s max duration (incl. graceful stop):
      * default: Up to 10 looping VUs for 15s over 2 stages (gracefulRampDown: 30s, gracefulStop: 30s)

    data_received..................: 4.2 MB 271 kB/s
    data_sent......................: 41 kB 2.6 kB/s
    http_req_blocked...............: avg=13.72ms min=0s med=5µs max=758.52ms p(90)=12µs p(95)=19µs
    http_req_connecting............: avg=5.99ms min=0s med=0s max=233.73ms p(90)=0s p(95)=0s
    http_req_duration..............: avg=333.16ms min=210.86ms med=233.8ms max=1.27s p(90)=481.08ms p(95)=681.9ms
      { expected_response:true }...: avg=333.16ms min=210.86ms med=233.8ms max=1.27s p(90)=481.08ms p(95)=681.9ms
    http_req_failed................: 0.00% 0 out of 364
    http_req_receiving.............: avg=8.09ms min=8µs med=69µs max=419.52ms p(90)=157.7µs p(95)=416.34µs
    http_req_sending...............: avg=26.63µs min=2µs med=18.5µs max=306µs p(90)=44µs p(95)=61.94µs
    http_req_tls_handshaking.......: avg=7.71ms min=0s med=0s max=527.52ms p(90)=0s p(95)=0s
    http_req_waiting...............: avg=325.03ms min=210.66ms med=229.16ms max=1.27s p(90)=471.68ms p(95)=639.4ms
    http_reqs......................: 364 23.419803/s
    iteration_duration.............: avg=347.02ms min=211.02ms med=234.82ms max=1.27s p(90)=495.74ms p(95)=692.85ms
    iterations.....................: 364 23.419803/s
    vus............................: 10 min=2 max=10
    vus_max........................: 10 min=10 max=10

    running (15.5s), 00/10 VUs, 364 complete and 0 interrupted iterations
    default ✓ [======================================] 00/10 VUs 15s

Constant Rate

- Run at a constant RPS (Requests Per Second).

- This is useful when you want to maintain a constant load on the system. (in terms of incoming requests)

- This can be used when doing “Stress” tests.

How is it different from Constant Users?

- In Constant Users, the number of VUs is constant, but the rate of requests can vary based on the response time.

- In Constant Rate, the rate of requests is constant, but the number of VUs can vary based on the response time.
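The difference can be sketched with two small formulas in plain JavaScript. The function names are made up for illustration, and the model is deliberately simplified (every iteration is assumed to take the same time). The 1.18 s value is the average `iteration_duration` from the second constant-rate run shown in this section, where the pool was capped at 10 VUs.

```javascript
// Closed model (constant VUs): each VU starts a new iteration only after the
// previous one ends, so throughput is bounded by vus / iteration duration.
function closedModelRate(vus, iterationSeconds) {
  return vus / iterationSeconds
}

// Open model (constant arrival rate): iterations start on a schedule, so the
// number of VUs busy at once is roughly rate * iteration duration (Little's law).
function busyVUs(ratePerSecond, iterationSeconds) {
  return Math.ceil(ratePerSecond * iterationSeconds)
}

// 5 constant VUs: when the system slows down, the request rate drops.
console.log(closedModelRate(5, 0.25)) // 20 iterations/s
console.log(closedModelRate(5, 0.5)) // 10 iterations/s

// 10 iterations/s: when the system slows down, more VUs are needed instead.
console.log(busyVUs(10, 0.25)) // 3 VUs
console.log(busyVUs(10, 1.18)) // 12 VUs
```

Under these assumptions, sustaining 10 iterations/s at 1.18 s per iteration needs about 12 VUs, which is consistent with the "Insufficient VUs" warning and the dropped iterations in the run that was limited to 10.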

    import http from 'k6/http'

    export const options = {
      discardResponseBodies: true,
      scenarios: {
        constant_request_rate: {
          executor: 'constant-arrival-rate',
          rate: 10, // This should be the target RPS you want to achieve
          timeUnit: '1s',
          duration: '30s', // It could be '30m', '2h' etc
          preAllocatedVUs: 5, // No. of users to start with
          maxVUs: 20, // Maximum number of Virtual Users
        },
      },
    }

    export default function () {
      http.get('https://test-api.k6.io')
    }

Output:

    scenarios: (100.00%) 1 scenario, 20 max VUs, 1m0s max duration (incl. graceful stop):
      * constant_request_rate: 10.00 iterations/s for 30s (maxVUs: 5-20, gracefulStop: 30s)

    data_received..................: 1.3 MB 44 kB/s
    data_sent......................: 33 kB 1.1 kB/s
    dropped_iterations.............: 2 0.064737/s
    http_req_blocked...............: avg=10.38ms min=4µs med=6µs max=469.12ms p(90)=9µs p(95)=25µs
    http_req_connecting............: avg=5.06ms min=0s med=0s max=227.5ms p(90)=0s p(95)=0s
    http_req_duration..............: avg=292.48ms min=207.89ms med=217.88ms max=1.55s p(90)=510.21ms p(95)=681.26ms
      { expected_response:true }...: avg=407.55ms min=212.26ms med=234.13ms max=1.55s p(90)=702.11ms p(95)=907.9ms
    http_req_failed................: 63.54% 190 out of 299
    http_req_receiving.............: avg=2.91ms min=21µs med=50µs max=639.2ms p(90)=80µs p(95)=109µs
    http_req_sending...............: avg=25.95µs min=14µs med=17µs max=416µs p(90)=37.2µs p(95)=54.09µs
    http_req_tls_handshaking.......: avg=5.22ms min=0s med=0s max=232.74ms p(90)=0s p(95)=0s
    http_req_waiting...............: avg=289.54ms min=207.74ms med=217.82ms max=1.55s p(90)=507.37ms p(95)=675.58ms
    http_reqs......................: 299 9.678148/s
    iteration_duration.............: avg=303.01ms min=208.04ms med=218.61ms max=1.55s p(90)=640.74ms p(95)=690.02ms
    iterations.....................: 299 9.678148/s
    vus............................: 4 min=2 max=5
    vus_max........................: 7 min=6 max=7

    running (0m30.9s), 00/07 VUs, 299 complete and 0 interrupted iterations
    constant_request_rate ✓ [======================================] 00/07 VUs 30s 10.00 iters/s

A second run with a smaller VU pool (maxVUs: 2-10 in the scenario line) ran out of VUs, so k6 dropped iterations instead of reaching the target rate:

    scenarios: (100.00%) 1 scenario, 10 max VUs, 1m0s max duration (incl. graceful stop):
      * constant_request_rate: 10.00 iterations/s for 30s (maxVUs: 2-10, gracefulStop: 30s)

    WARN[0009] Insufficient VUs, reached 10 active VUs and cannot initialize more executor=constant-arrival-rate scenario=constant_request_rate

    data_received..............: 120 kB 3.6 kB/s
    data_sent..................: 25 kB 740 B/s
    dropped_iterations.........: 93 2.786794/s
    http_req_blocked...........: avg=22.83ms min=3µs med=6µs max=764.02ms p(90)=32.3µs p(95)=304.94µs
    http_req_connecting........: avg=10.48ms min=0s med=0s max=234.28ms p(90)=0s p(95)=0s
    http_req_duration..........: avg=1.15s min=210.02ms med=999.94ms max=3.67s p(90)=2.38s p(95)=2.95s
    http_req_failed............: 100.00% 208 out of 208
    http_req_receiving.........: avg=108.88µs min=19µs med=52µs max=9.29ms p(90)=107.4µs p(95)=151.94µs
    http_req_sending...........: avg=34.41µs min=10µs med=18µs max=553µs p(90)=56.6µs p(95)=94.54µs
    http_req_tls_handshaking...: avg=12.17ms min=0s med=0s max=529.58ms p(90)=0s p(95)=0s
    http_req_waiting...........: avg=1.15s min=209.97ms med=999.82ms max=3.67s p(90)=2.38s p(95)=2.95s
    http_reqs..................: 208 6.232829/s
    iteration_duration.........: avg=1.18s min=210.16ms med=1.03s max=3.67s p(90)=2.38s p(95)=2.95s
    iterations.................: 208 6.232829/s
    vus........................: 1 min=1 max=10
    vus_max....................: 10 min=5 max=10

    running (0m33.4s), 00/10 VUs, 208 complete and 0 interrupted iterations
    constant_request_rate ✓ [======================================] 00/10 VUs 30s 10.00 iters/s

Ramping Rate

- Start with a low request rate and gradually increase it over time; k6 adds VUs as needed (up to maxVUs) to keep up.

- This is useful for simulating a gradual increase in user traffic to test how the system handles the load.

- This can be used when doing “Scaling” tests.

- This is also helpful when you want to collect metrics, such as latency, at different load levels.
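Because the rate changes linearly within each stage, the number of iterations k6 will try to start is the area under the rate curve. The sketch below computes it in plain JavaScript for the stages used in the script that follows; `toSeconds` and `expectedIterations` are illustrative helper names, and the duration parser only handles simple single-unit values.

```javascript
// Parse a simple k6-style duration such as '5s', '10m' or '2h' into seconds.
// Only single-unit values are handled here; k6 itself accepts richer forms like '1m30s'.
function toSeconds(duration) {
  const match = /^(\d+)(s|m|h)$/.exec(duration)
  if (!match) throw new Error(`Unsupported duration: ${duration}`)
  const unitSeconds = { s: 1, m: 60, h: 3600 }
  return Number(match[1]) * unitSeconds[match[2]]
}

// Area under the rate curve: each stage moves linearly from the previous
// target to its own target, so its contribution is a trapezoid.
function expectedIterations(startRate, stages) {
  let previousRate = startRate
  let total = 0
  for (const stage of stages) {
    const seconds = toSeconds(stage.duration)
    total += ((previousRate + stage.target) / 2) * seconds
    previousRate = stage.target
  }
  return total
}

const stages = [
  { duration: '5s', target: 5 },
  { duration: '10s', target: 5 },
  { duration: '5s', target: 10 },
  { duration: '10s', target: 10 },
  { duration: '5s', target: 15 },
  { duration: '10s', target: 15 },
]

console.log(expectedIterations(1, stages)) // 415
```

In the sample run for this script, k6 reports 407 completed iterations and 8 dropped ones, which adds up to the same 415.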

    import http from 'k6/http'

    export const options = {
      discardResponseBodies: true,
      scenarios: {
        ramping_arrival_rate: {
          executor: 'ramping-arrival-rate',
          startRate: 1, // Initial RPS
          timeUnit: '1s',
          preAllocatedVUs: 5,
          maxVUs: 20,
          stages: [
            { duration: '5s', target: 5 }, // ramp-up to 5 RPS
            { duration: '10s', target: 5 }, // constant load at 5 RPS
            { duration: '5s', target: 10 }, // ramp-up to 10 RPS
            { duration: '10s', target: 10 }, // constant load at 10 RPS
            { duration: '5s', target: 15 }, // ramp-up to 15 RPS
            { duration: '10s', target: 15 }, // constant load at 15 RPS
          ],
        },
      },
    }

    export default function () {
      http.get('https://test-api.k6.io')
    }

Output:

    scenarios: (100.00%) 1 scenario, 20 max VUs, 1m15s max duration (incl. graceful stop):
      * ramping_arrival_rate: Up to 15.00 iterations/s for 45s over 6 stages (maxVUs: 5-20, gracefulStop: 30s)

    data_received..................: 4.7 MB 104 kB/s
    data_sent......................: 46 kB 1.0 kB/s
    dropped_iterations.............: 8 0.176378/s
    http_req_blocked...............: avg=16.95ms min=2µs med=5µs max=1.24s p(90)=13.4µs p(95)=28µs
    http_req_connecting............: avg=7.12ms min=0s med=0s max=283.08ms p(90)=0s p(95)=0s
    http_req_duration..............: avg=457.71ms min=207.27ms med=409.92ms max=3.16s p(90)=683.39ms p(95)=1.11s
      { expected_response:true }...: avg=457.71ms min=207.27ms med=409.92ms max=3.16s p(90)=683.39ms p(95)=1.11s
    http_req_failed................: 0.00% 0 out of 407
    http_req_receiving.............: avg=14.64ms min=21µs med=50µs max=682.75ms p(90)=109.2µs p(95)=204.14ms
    http_req_sending...............: avg=24.52µs min=9µs med=17µs max=776µs p(90)=37.4µs p(95)=51.69µs
    http_req_tls_handshaking.......: avg=9.74ms min=0s med=0s max=1.04s p(90)=0s p(95)=0s
    http_req_waiting...............: avg=443.04ms min=207.2ms med=247.73ms max=3.04s p(90)=678.81ms p(95)=1.11s
    http_reqs......................: 407 8.973254/s
    iteration_duration.............: avg=474.8ms min=207.44ms med=412.32ms max=3.16s p(90)=699.15ms p(95)=1.41s
    iterations.....................: 407 8.973254/s
    vus............................: 4 min=1 max=11
    vus_max........................: 13 min=5 max=13

    running (0m45.4s), 00/13 VUs, 407 complete and 0 interrupted iterations
    ramping_arrival_rate ✓ [======================================] 00/13 VUs 45s 15.00 iters/s

Multiple Scenarios

Run more than one scenario in a single test.

- Both scenarios run in parallel.

- Each scenario has its own VUs and iterations.
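Since every scenario brings its own VU pool and all scenarios start together by default, the totals for the whole test can be worked out from the individual scenarios. The sketch below does this in plain JavaScript for the two scenarios used in this section (only the fields needed for the arithmetic are copied); the helper names are made up for illustration, and the duration handling only covers plain seconds such as '30s'.

```javascript
// Scenario settings copied from the script in this section (trimmed to the relevant fields).
const scenarios = {
  ramping_arrival_rate: {
    executor: 'ramping-arrival-rate',
    maxVUs: 20,
    stages: [
      { duration: '5s', target: 5 },
      { duration: '10s', target: 5 },
    ],
  },
  constant_request_rate: {
    executor: 'constant-arrival-rate',
    maxVUs: 20,
    duration: '30s',
  },
}

// Each scenario owns its VU pool, so the worst-case VU count is the sum of the pools.
function totalMaxVUs(allScenarios) {
  return Object.values(allScenarios).reduce((sum, s) => sum + s.maxVUs, 0)
}

// Scenarios start together by default, so the test lasts as long as the longest one
// (graceful stop time not included). Durations here are plain seconds like '30s'.
function longestScenarioSeconds(allScenarios) {
  const seconds = (d) => Number(d.replace('s', ''))
  const lengths = Object.values(allScenarios).map((s) =>
    s.stages ? s.stages.reduce((sum, st) => sum + seconds(st.duration), 0) : seconds(s.duration)
  )
  return Math.max(...lengths)
}

console.log(totalMaxVUs(scenarios)) // 40
console.log(longestScenarioSeconds(scenarios)) // 30
```

These figures line up with the summary k6 prints for this test: "40 max VUs" and a "1m0s max duration", which is the 30 seconds of the longest scenario plus the 30-second graceful stop.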

    import http from 'k6/http'

    export const options = {
      discardResponseBodies: true,
      scenarios: {
        ramping_arrival_rate: {
          executor: 'ramping-arrival-rate',
          startRate: 1, // Initial RPS
          timeUnit: '1s',
          preAllocatedVUs: 5,
          maxVUs: 20,
          stages: [
            { duration: '5s', target: 5 }, // ramp-up to 5 RPS
            { duration: '10s', target: 5 }, // constant load at 5 RPS
          ],
        },
        constant_request_rate: {
          executor: 'constant-arrival-rate',
          rate: 10, // This should be the target RPS you want to achieve
          timeUnit: '1s',
          duration: '30s', // It could be '30m', '2h' etc
          preAllocatedVUs: 5, // No. of users to start with
          maxVUs: 20, // Maximum number of Virtual Users
        },
      },
    }

    export default function () {
      http.get('https://test-api.k6.io')
    }

Output:

    scenarios: (100.00%) 2 scenarios, 40 max VUs, 1m0s max duration (incl. graceful stop):
      * constant_request_rate: 10.00 iterations/s for 30s (maxVUs: 5-20, gracefulStop: 30s)
      * ramping_arrival_rate: Up to 5.00 iterations/s for 15s over 2 stages (maxVUs: 5-20, gracefulStop: 30s)

    data_received..................: 4.2 MB 136 kB/s
    data_sent......................: 42 kB 1.4 kB/s
    dropped_iterations.............: 4 0.130029/s
    http_req_blocked...............: avg=21.72ms min=2µs med=5µs max=943.03ms p(90)=17µs p(95)=53.94µs
    http_req_connecting............: avg=8.54ms min=0s med=0s max=235.63ms p(90)=0s p(95)=0s
    http_req_duration..............: avg=474.72ms min=207.31ms med=437.69ms max=1.79s p(90)=762.19ms p(95)=1.01s
      { expected_response:true }...: avg=474.72ms min=207.31ms med=437.69ms max=1.79s p(90)=762.19ms p(95)=1.01s
    http_req_failed................: 0.00% 0 out of 360
    http_req_receiving.............: avg=16.03ms min=15µs med=51µs max=775.55ms p(90)=118.8µs p(95)=208.08ms
    http_req_sending...............: avg=28.76µs min=6µs med=18µs max=339µs p(90)=50µs p(95)=101.04µs
    http_req_tls_handshaking.......: avg=13.07ms min=0s med=0s max=707.33ms p(90)=0s p(95)=0s
    http_req_waiting...............: avg=458.66ms min=207.25ms med=426.26ms max=1.79s p(90)=736.89ms p(95)=964.56ms
    http_reqs......................: 360 11.702628/s
    iteration_duration.............: avg=496.59ms min=207.45ms med=440.84ms max=1.79s p(90)=878.98ms p(95)=1.12s
    iterations.....................: 360 11.702628/s
    vus............................: 6 min=3 max=10
    vus_max........................: 14 min=12 max=14

    running (0m30.8s), 00/14 VUs, 360 complete and 0 interrupted iterations
    constant_request_rate ✓ [======================================] 00/09 VUs 30s 10.00 iters/s
    ramping_arrival_rate ✓ [======================================] 00/05 VUs 15s 5.00 iters/s

Performance Scenarios

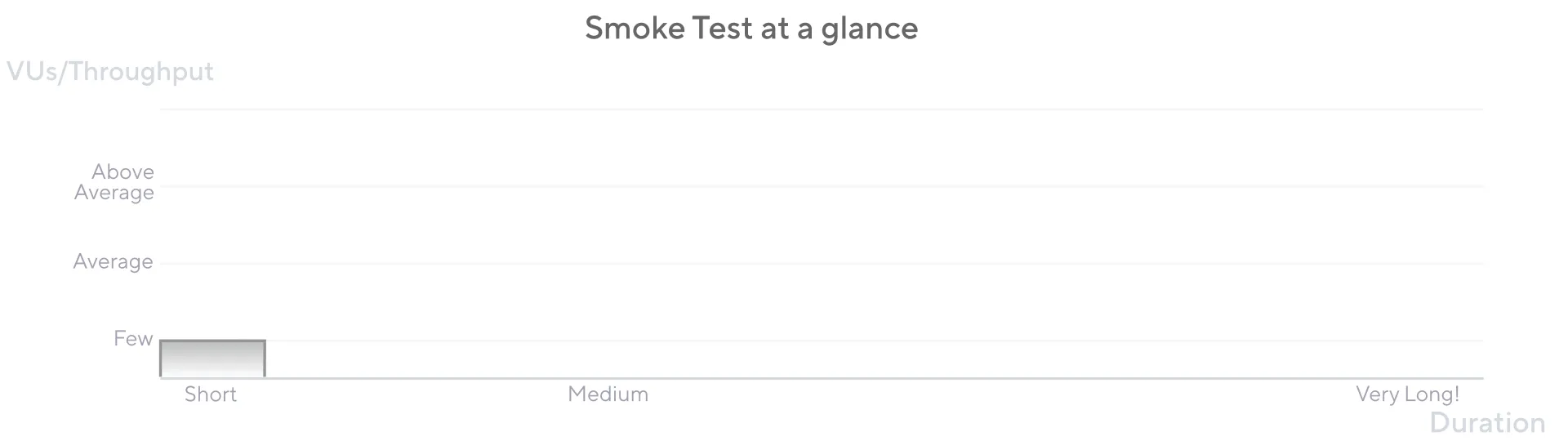

Smoke Testing

Smoke tests have a minimal load. Run them to verify that the system works well under minimal load and to gather baseline performance values.
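One way to turn "works well under minimal load" into an automatic pass/fail signal is to attach k6 thresholds to the smoke test options. The fragment below is a sketch in plain JavaScript: the `smokeOptions` name and the limits (1% errors, 500 ms at the 95th percentile) are placeholder assumptions, not recommendations, and should be replaced with your own targets.

```javascript
// Smoke-test options with pass/fail criteria.
// The limits below are placeholder assumptions; replace them with your own targets.
const smokeOptions = {
  vus: 3,
  duration: '1m',
  thresholds: {
    http_req_failed: ['rate<0.01'], // fewer than 1% of requests may fail
    http_req_duration: ['p(95)<500'], // 95% of requests must finish within 500 ms
  },
}

// In a k6 script, expose it with: export const options = smokeOptions
console.log(JSON.stringify(smokeOptions.thresholds))
```

When a threshold is not met, k6 marks the run as failed and exits with a non-zero code, which makes a smoke test easy to wire into a CI pipeline.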

    import http from 'k6/http'
    import { check, sleep } from 'k6'

    export const options = {
      vus: 3, // Key for Smoke test. Keep it at 2, 3, max 5 VUs
      duration: '1m', // This can be shorter or just a few iterations
    }

    export default () => {
      const urlRes = http.get('https://test-api.k6.io')
      sleep(1)
      // MORE STEPS
      // Here you can have more steps or complex script
      // Step1
      // Step2
      // etc.
    }

Average Load Testing

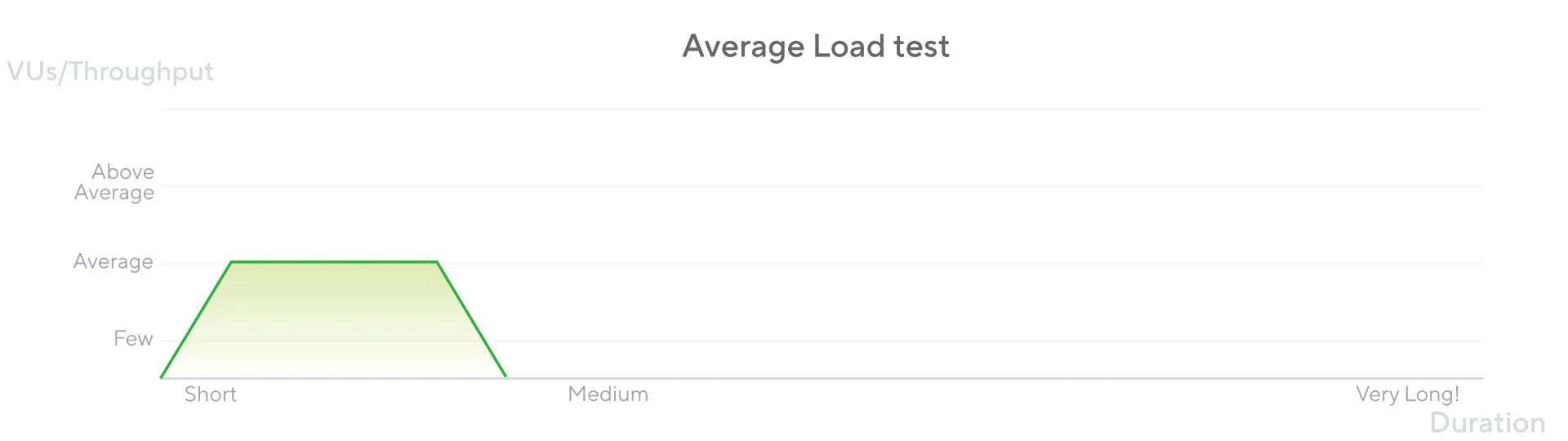

An average-load test assesses how the system performs under typical load. Typical load might be a regular day in production or an average moment.

- This is generally achieved through a “ramp-up Users” scenario.

- A ramp-up rate scenario can also work.

- Sometimes, a constant rate scenario can also be used based on the Average RPS.
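The average-load, stress, and soak tests in this post all share the same shape: ramp up, hold, ramp down. Only the target and the durations change. A small helper can make that explicit; the `loadProfile` function below is a hypothetical convenience written in plain JavaScript, not part of k6, and the values passed to it are the ones used in the scripts of this post.

```javascript
// Build the ramp-up / plateau / ramp-down shape shared by average-load, stress and soak tests.
function loadProfile({ target, rampUp, hold, rampDown }) {
  return [
    { duration: rampUp, target }, // ramp up to the target number of VUs
    { duration: hold, target }, // hold the load steady
    { duration: rampDown, target: 0 }, // ramp back down to zero
  ]
}

const averageLoad = loadProfile({ target: 100, rampUp: '5m', hold: '30m', rampDown: '5m' })
const stress = loadProfile({ target: 200, rampUp: '10m', hold: '30m', rampDown: '5m' })
const soak = loadProfile({ target: 100, rampUp: '5m', hold: '8h', rampDown: '5m' })

// In a k6 script: export const options = { stages: averageLoad }
console.log(averageLoad)
```

Keeping the shape in one place makes it harder for the three test types to drift apart accidentally, at the cost of one more indirection when reading the script.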

import http from 'k6/http'import { sleep } from 'k6'

export const options = { // Key configurations for avg load test in this section stages: [ { duration: '5m', target: 100 }, // traffic ramp-up from 1 to 100 users over 5 minutes. { duration: '30m', target: 100 }, // stay at 100 users for 30 minutes { duration: '5m', target: 0 }, // ramp-down to 0 users ],}

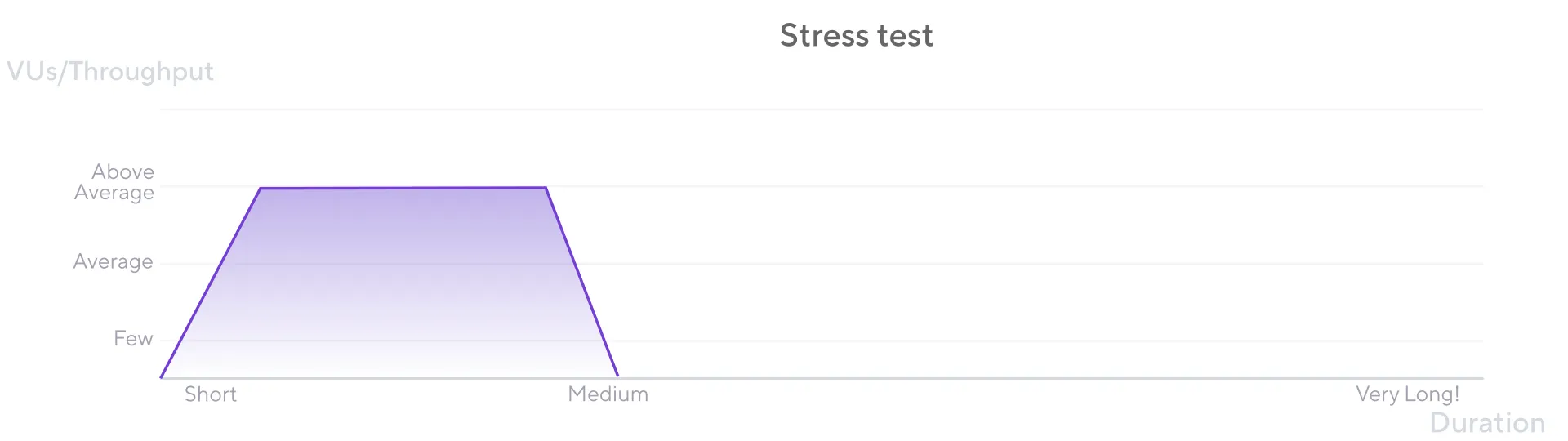

export default () => { const urlRes = http.get('https://test-api.k6.io') sleep(1) // MORE STEPS // Here you can have more steps or complex script // Step1 // Step2 // etc.}Stress testing

Stress testing assesses how the system performs when loads are heavier than usual.

    import http from 'k6/http'
    import { sleep } from 'k6'

    export const options = {
      // Key configurations for Stress in this section
      stages: [
        { duration: '10m', target: 200 }, // traffic ramp-up from 1 to a higher 200 users over 10 minutes.
        { duration: '30m', target: 200 }, // stay at higher 200 users for 30 minutes
        { duration: '5m', target: 0 }, // ramp-down to 0 users
      ],
    }

    export default () => {
      const urlRes = http.get('https://test-api.k6.io')
      sleep(1)
      // MORE STEPS
      // Here you can have more steps or complex script
      // Step1
      // Step2
      // etc.
    }

Soak Testing

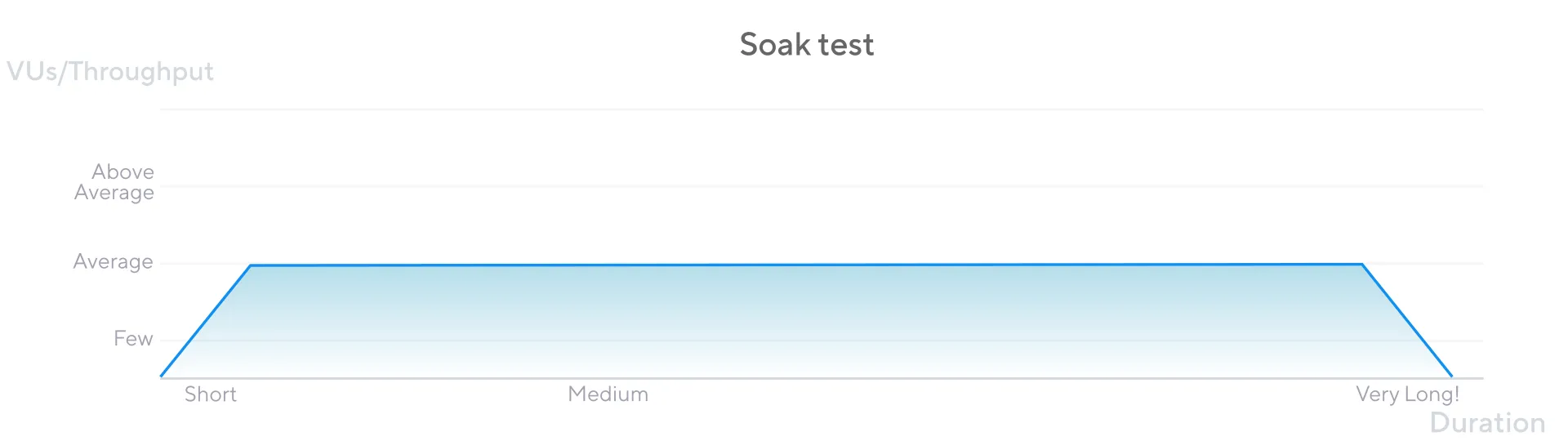

Soak testing is another variation of the Average-Load test. It focuses on extended periods, analyzing the following:

- The system’s degradation of performance and resource consumption over extended periods.

- The system’s availability and stability during extended periods.
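One simple way to quantify degradation over a long run is to compare latency near the start with latency near the end. The sketch below does this in plain JavaScript; the `samples` array is made-up data standing in for per-minute p95 values exported from your monitoring tool, and the `degradationRatio` helper and its 60-minute window are illustrative assumptions.

```javascript
// Compare average p95 latency in the first and last window of a long run.
// `samples` is a hypothetical list of { minute, p95Ms } points from a monitoring tool.
function degradationRatio(samples, windowMinutes) {
  const lastMinute = Math.max(...samples.map((s) => s.minute))
  const average = (points) => points.reduce((sum, p) => sum + p.p95Ms, 0) / points.length
  const early = samples.filter((s) => s.minute < windowMinutes)
  const late = samples.filter((s) => s.minute > lastMinute - windowMinutes)
  return average(late) / average(early)
}

// Made-up data for an 8-hour soak test (minutes 0 to 479).
const samples = [
  { minute: 0, p95Ms: 400 },
  { minute: 30, p95Ms: 420 },
  { minute: 240, p95Ms: 500 },
  { minute: 450, p95Ms: 590 },
  { minute: 479, p95Ms: 640 },
]

console.log(degradationRatio(samples, 60)) // 1.5
```

A ratio close to 1 suggests stable behaviour, while a steadily growing ratio under constant load is a hint to look for leaks or resource exhaustion.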

    import http from 'k6/http'
    import { sleep } from 'k6'

    export const options = {
      // Key configurations for Soak test in this section
      stages: [
        { duration: '5m', target: 100 }, // traffic ramp-up from 1 to 100 users over 5 minutes.
        { duration: '8h', target: 100 }, // stay at 100 users for 8 hours!!!
        { duration: '5m', target: 0 }, // ramp-down to 0 users
      ],
    }

    export default () => {
      const urlRes = http.get('https://test-api.k6.io')
      sleep(1)
      // MORE STEPS
      // Here you can have more steps or complex script
      // Step1
      // Step2
      // etc.
    }

Spike Testing

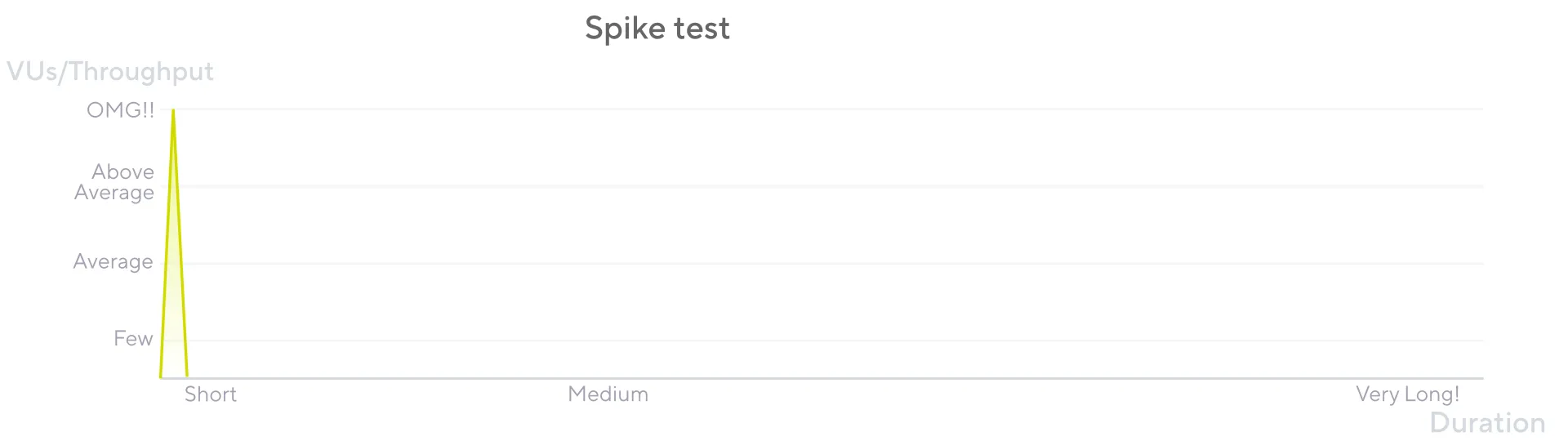

A spike test verifies whether the system survives and performs under sudden and massive rushes of utilization.

    import http from 'k6/http'
    import { sleep } from 'k6'

    export const options = {
      // Key configurations for spike in this section
      stages: [
        { duration: '2m', target: 2000 }, // fast ramp-up to a high point
        // No plateau
        { duration: '1m', target: 0 }, // quick ramp-down to 0 users
      ],
    }

    export default () => {
      const urlRes = http.get('https://test-api.k6.io')
      sleep(1)
      // MORE STEPS
      // Add only the processes that will be on high demand
      // Step1
      // Step2
      // etc.
    }