JavaScript Event Loop

Master the JavaScript event loop architecture across browser and Node.js environments, understanding task scheduling, microtasks, and performance optimization techniques.

TLDR

The JavaScript event loop is the concurrency mechanism that lets single-threaded JavaScript handle asynchronous operations: the host queues callbacks as tasks (macrotasks) and microtasks, and runs them one at a time whenever the call stack is empty.

Core Architecture Principles

- Single-threaded Execution: JavaScript runs on one thread with a call stack and run-to-completion guarantee

- Event Loop: Central mechanism orchestrating asynchronous operations around the engine

- Two-tier Priority System: Microtasks (high priority) and macrotasks (lower priority) with strict execution order

- Host Environment Integration: Different implementations for browsers (UI-focused) and Node.js (I/O-focused)

Universal Priority System

- Synchronous Code: Executes immediately on the call stack

- Microtasks: Promise callbacks, queueMicrotask, MutationObserver (the whole queue is drained once the call stack empties, which includes after each macrotask)

- Macrotasks: setTimeout, setInterval, I/O operations, user events (processed in event loop phases)

- Execution Order: Synchronous → process.nextTick (Node.js only) → Microtasks → Macrotasks (in Node.js, selected phase by phase)

Browser Event Loop

- Rendering Integration: Integrated with 16.7ms frame budget for 60fps

- Task Source Prioritization: The HTML Standard lets the browser choose which task queue to service next; a typical ordering is user interaction (high) → DOM manipulation (medium) → networking (medium) → timers (low)

- requestAnimationFrame: Executes before repaint for smooth animations

- Microtask Starvation: Potential issue where microtasks block macrotasks indefinitely

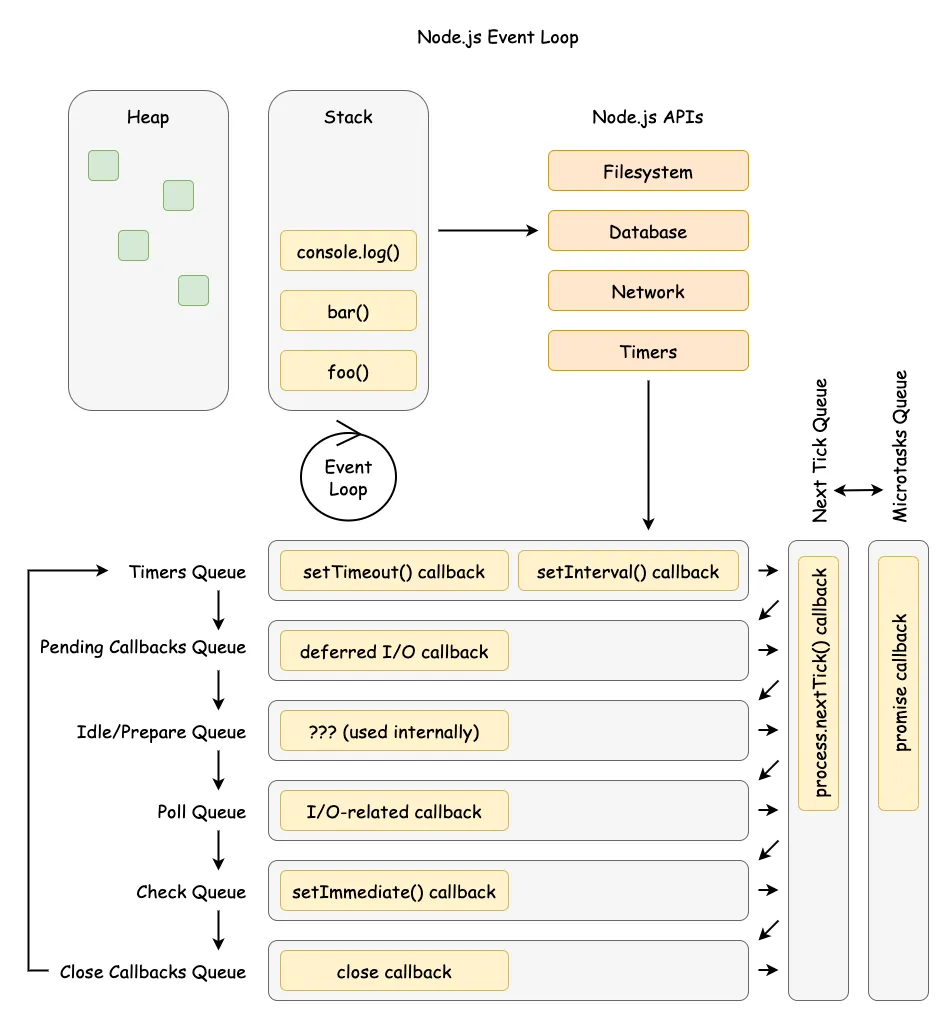

Node.js Event Loop (libuv)

- Phased Architecture: Six phases (timers → pending → idle → poll → check → close)

- Poll Phase Logic: Blocks waiting for I/O until the next timer is due; does not block when setImmediate callbacks are pending

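- Thread Pool: Blocking or CPU-heavy operations (fs, dns.lookup, some crypto and zlib calls) run on libuv's thread pool (4 threads by default), which is separate from worker_threads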

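- Direct I/O: Network operations use non-blocking sockets and OS notification mechanisms (epoll, kqueue, IOCP), so they are handled on the main thread without the thread pool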

- Node.js-specific APIs: process.nextTick (highest priority), setImmediate (check phase)

Performance Optimization

- Keep Tasks Short: Avoid blocking the event loop with long synchronous operations

- Proper Scheduling: Choose microtasks vs macrotasks based on priority needs

- Avoid Starvation: Prevent microtask flooding that blocks macrotasks

- Environment-specific: Use requestAnimationFrame for animations in the browser; use Web Workers (browser) or worker_threads (Node.js) for CPU-intensive tasks

True Parallelism

- Worker Threads: Independent event loops for CPU-bound tasks

- Memory Sharing: Structured clone, transferable objects, SharedArrayBuffer

- Communication: Message passing with explicit coordination

- Safety: Thread isolation avoids data races by default; memory shared through SharedArrayBuffer must be coordinated with Atomics

Monitoring & Debugging

- Event Loop Lag: Measure time between event loop iterations

- Bottleneck Identification: CPU-bound vs I/O-bound vs thread pool issues

- Performance Tools: Event loop metrics, memory usage, CPU profiling

- Best Practices: Environment-aware scheduling, proper error handling, resource management

Table of Contents

- The Abstract Concurrency Model

- Universal Priority System: Tasks and Microtasks

- Browser Event Loop Architecture

- Node.js Event Loop: libuv Integration

- Node.js-Specific Scheduling

- True Parallelism: Worker Threads

- Best Practices and Performance Optimization

- Conclusion

- References

The Abstract Concurrency Model

JavaScript’s characterization as a “single-threaded, non-blocking, asynchronous, concurrent language” obscures the sophisticated interplay between the JavaScript engine and its host environment. The event loop is not a language feature but the central mechanism provided by the host to orchestrate asynchronous operations around the engine’s single-threaded execution.

Runtime Architecture

graph TB

subgraph "JavaScript Runtime"

subgraph "JavaScript Engine"

A["V8/SpiderMonkey/JavaScriptCore"]

B[ECMAScript Implementation]

C[Call Stack & Heap]

D[Garbage Collection]

end

subgraph "Host Environment"

E["Browser APIs / Node.js APIs"]

F[Event Loop]

G[I/O Operations]

H[Timer Management]

end

subgraph "Bridge Layer"

I[API Bindings]

J[Callback Queuing]

K[Event Delegation]

end

end

A --> B

B --> C

B --> D

E --> F

F --> G

F --> H

B --> I

I --> J

J --> K

K --> F

Core Execution Primitives

The execution model described by the ECMAScript specification rests on three fundamental primitives:

- Call Stack: LIFO data structure tracking execution context

- Heap: Unstructured memory region for object allocation

- Run-to-Completion Guarantee: Functions execute without preemption

graph LR

subgraph "Execution Model"

A[Task Queue] --> B[Event Loop]

B --> C[Call Stack]

C --> D[Function Execution]

D --> E[Return/Complete]

E --> F[Stack Empty?]

F -->|Yes| G[Next Task]

F -->|No| D

G --> A

end
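The run-to-completion guarantee can be sketched as follows. This is a minimal illustration, not part of the original article: `runToCompletionDemo` is a hypothetical helper, and the 20 ms busy-wait is an arbitrary value. A timer that is already due still cannot interrupt the synchronous loop, so its callback runs only after the call stack empties.

```javascript
// Hypothetical helper: shows that a due timer cannot preempt running code.
function runToCompletionDemo(busyMs = 20) {
  return new Promise((resolve) => {
    const events = [];

    // Due almost immediately, yet it must wait for the call stack to empty.
    setTimeout(() => {
      events.push("timer callback");
      resolve(events);
    }, 0);

    // Busy-wait: occupies the call stack for roughly busyMs milliseconds.
    const end = Date.now() + busyMs;
    while (Date.now() < end) {
      // intentionally empty
    }
    events.push("synchronous loop finished");
  });
}

runToCompletionDemo().then((events) => console.log(events.join(" -> ")));
// prints "synchronous loop finished -> timer callback"
```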

Specification Hierarchy

graph TD

A["ECMA-262 (ECMAScript)"] --> B[Abstract Agent Model]

B --> C[Jobs & Job Queues]

D[WHATWG HTML Standard] --> E[Browser Event Loop]

E --> F[Tasks & Microtasks]

E --> G[Rendering Pipeline]

H[Node.js/libuv] --> I[Phased Event Loop]

I --> J[I/O Optimization]

I --> K[Thread Pool]

C --> E

C --> I

Universal Priority System: Tasks and Microtasks

All modern JavaScript environments implement a two-tiered priority system governing asynchronous operation scheduling.

Queue Processing Model

graph TD

A[Event Loop Tick] --> B[Select Macrotask]

B --> C[Execute Macrotask]

C --> D[Call Stack Empty?]

D -->|No| C

D -->|Yes| E[Microtask Checkpoint]

E --> F[Process All Microtasks]

F --> G[Microtask Queue Empty?]

G -->|No| F

G -->|Yes| H[Next Phase]

H --> A
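The queue processing model above can be observed with a short sketch. The helper name `runOrderingDemo` and the labels it records are illustrative assumptions; the ordering itself follows from the model: synchronous code first, then the full microtask queue, then the next macrotask.

```javascript
// Hypothetical helper: records the order in which callbacks actually run.
function runOrderingDemo() {
  const order = [];
  return new Promise((resolve) => {
    // Macrotask: runs in a later event loop iteration.
    setTimeout(() => {
      order.push("macrotask: setTimeout");
      resolve(order);
    }, 0);

    // Microtasks: run as soon as the current call stack empties.
    Promise.resolve().then(() => order.push("microtask: promise"));
    queueMicrotask(() => order.push("microtask: queueMicrotask"));

    // Synchronous code: runs immediately.
    order.push("sync");
  });
}

runOrderingDemo().then((order) => console.log(order.join(" -> ")));
// prints "sync -> microtask: promise -> microtask: queueMicrotask -> macrotask: setTimeout"
```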

Priority Hierarchy

graph TD

subgraph "Execution Priority"

A[Synchronous Code] --> B["nextTick Queue (Node.js only)"]

B --> C[Microtask Queue]

C --> D[Macrotask Queue]

D --> E[Event Loop Phases]

end

subgraph "Macrotask Sources"

F[setTimeout/setInterval]

G[I/O Operations]

H[User Events]

I[Network Requests]

end

subgraph "Microtask Sources"

J[Promise callbacks]

K[queueMicrotask]

L[MutationObserver]

end

F --> D

G --> D

H --> D

I --> D

J --> C

K --> C

L --> C

Microtask Starvation Pattern

// Pathological microtask starvation
function microtaskFlood() {
  Promise.resolve().then(microtaskFlood)
}
microtaskFlood()

// This macrotask will never execute
setTimeout(() => {
  console.log("Starved macrotask")
}, 1000)

Browser Event Loop Architecture

The browser event loop is optimized for UI responsiveness, integrating directly with the rendering pipeline.

WHATWG Processing Model

graph TD

A[Event Loop Iteration] --> B[Select Task from Queue]

B --> C[Execute Task]

C --> D[Call Stack Empty?]

D -->|No| C

D -->|Yes| E[Microtask Checkpoint]

E --> F[Drain Microtask Queue]

F --> G[Update Rendering]

G --> H[Repaint Needed?]

H -->|Yes| I[Run rAF Callbacks]

I --> J[Style Recalculation]

J --> K[Layout/Reflow]

K --> L[Paint]

L --> M[Composite]

H -->|No| N[Idle Period]

M --> N

N --> A

Rendering Pipeline Integration

graph LR

subgraph "Frame Budget (16.7ms)"

A[JavaScript Execution] --> B[Style Calculation]

B --> C[Layout]

C --> D[Paint]

D --> E[Composite]

end

subgraph "requestAnimationFrame"

F[rAF Callbacks] --> G[Before Repaint]

end

subgraph "Timer Inaccuracy"

H[setTimeout Delay] --> I[Queuing Delay]

I --> J[Actual Execution]

end
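As a rough sketch of how requestAnimationFrame ties work to the frame budget, the hypothetical `animate` helper below derives progress from the frame timestamp rather than from a fixed step, so the animation stays correct even when frames are dropped. The timer fallback is an assumption added only so the sketch also runs outside a browser (for example in Node.js); it is not how browsers schedule frames.

```javascript
// Assumption: where requestAnimationFrame is missing, approximate a 60fps
// frame with a ~16 ms timer so the sketch still runs.
const nextFrame =
  typeof requestAnimationFrame === "function"
    ? (callback) => requestAnimationFrame(callback)
    : (callback) => setTimeout(() => callback(performance.now()), 16);

// Hypothetical helper: calls onProgress with a value from 0 to 1, once per frame.
function animate(durationMs, onProgress) {
  return new Promise((resolve) => {
    let start;
    function step(timestamp) {
      if (start === undefined) start = timestamp;
      // Progress is time-based, so a late frame skips ahead instead of lagging.
      const progress = Math.min((timestamp - start) / durationMs, 1);
      onProgress(progress);
      if (progress < 1) {
        nextFrame(step);
      } else {
        resolve();
      }
    }
    nextFrame(step);
  });
}

// Usage: in a browser, onProgress would typically update a style property.
animate(100, (progress) => {
  // e.g. element.style.opacity = String(progress)
}).then(() => console.log("animation finished"));
```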

Task Source Prioritization

graph TD

subgraph "Task Sources"

A[User Interaction] --> B[High Priority]

C[DOM Manipulation] --> D[Medium Priority]

E[Networking] --> F[Medium Priority]

G[Timers] --> H[Low Priority]

end

subgraph "Browser Implementation"

I[Task Queue Selection] --> J[Source-Based Priority]

J --> K[Responsive UI]

end

Node.js Event Loop: libuv Integration

Node.js implements a phased event loop architecture optimized for high-throughput I/O operations.

libuv Architecture

graph TB

subgraph "Node.js Runtime"

A[V8 Engine] --> B[JavaScript Execution]

C[libuv] --> D[Event Loop]

C --> E[Thread Pool]

C --> F[I/O Operations]

end

subgraph "OS Abstraction"

G[Linux: epoll] --> C

H[macOS: kqueue] --> C

I[Windows: IOCP] --> C

end

subgraph "Thread Pool"

J[File I/O] --> E

K[DNS Lookup] --> E

L[Crypto Operations] --> E

end

subgraph "Direct I/O"

M[Network Sockets] --> F

N[HTTP/HTTPS] --> F

end

Phased Event Loop Structure

graph TD

A[Event Loop Tick] --> B[timers]

B --> C[pending callbacks]

C --> D[idle, prepare]

D --> E[poll]

E --> F[check]

F --> G[close callbacks]

G --> A

subgraph "Phase Details"

H[setTimeout/setInterval] --> B

I[System Errors] --> C

J[I/O Callbacks] --> E

K[setImmediate] --> F

L[Close Events] --> G

end

Poll Phase Logic

graph TD

A[Enter Poll Phase] --> B{setImmediate callbacks?}

B -->|Yes| C[Don't Block]

B -->|No| D{Timers Expiring Soon?}

D -->|Yes| E[Wait for Timer]

D -->|No| F{Active I/O Operations?}

F -->|Yes| G[Wait for I/O]

F -->|No| H[Exit Poll]

C --> I[Proceed to Check]

E --> I

G --> I

H --> I

Thread Pool vs Direct I/O

graph LR

subgraph "Thread Pool Operations"

A[fs.readFile] --> B["Blocking or CPU-bound Work"]

C[dns.lookup] --> B

D[crypto.pbkdf2] --> B

E[zlib.gzip] --> B

end

subgraph "Direct I/O Operations"

F[net.Socket] --> G[Non-blocking I/O]

H[http.get] --> G

I[WebSocket] --> G

end

B --> J[libuv Thread Pool]

G --> K[Event Loop Direct]
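The thread pool side of this split can be sketched with the asynchronous `crypto.pbkdf2` API, which runs the key derivation off the main thread. The helper names, the salt, and the iteration count are illustrative assumptions and not a security recommendation. The `ticks` counter only shows that timer callbacks can still run while the derivations are in flight; its value depends on the machine.

```javascript
const crypto = require("node:crypto");

// Hypothetical helper: derive a key using the async API, which offloads
// the work to libuv's thread pool instead of blocking the event loop.
function deriveKey(password, salt) {
  return new Promise((resolve, reject) => {
    crypto.pbkdf2(password, salt, 20000, 32, "sha256", (err, key) => {
      if (err) reject(err);
      else resolve(key);
    });
  });
}

// Runs several derivations concurrently and counts timer callbacks that
// fire in the meantime.
async function hashWithoutBlocking(passwords) {
  let ticks = 0;
  const ticker = setInterval(() => {
    ticks += 1;
  }, 1);
  try {
    const keys = await Promise.all(
      passwords.map((password) => deriveKey(password, "demo-salt"))
    );
    return { keys, ticks };
  } finally {
    clearInterval(ticker);
  }
}

hashWithoutBlocking(["a", "b", "c", "d"]).then(({ keys, ticks }) => {
  console.log(`derived ${keys.length} keys, timer ticks meanwhile: ${ticks}`);
});
```

With the default pool of four threads, a fifth concurrent derivation would wait for a free thread, which is one way thread pool saturation shows up.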

Node.js-Specific Scheduling

Node.js provides unique scheduling primitives with distinct priority levels.

Priority Hierarchy

graph TD

subgraph "Node.js Priority System"

A[Synchronous Code] --> B[process.nextTick]

B --> C[Microtasks]

C --> D[timers Phase]

D --> E[poll Phase]

E --> F[check Phase]

F --> G[close callbacks]

end

subgraph "Scheduling APIs"

H[process.nextTick] --> I[Highest Priority]

J[Promise.then] --> K[Microtask Level]

L[setTimeout] --> M[Timer Phase]

N[setImmediate] --> O[Check Phase]

end

nextTick vs setImmediate Execution

graph TD

A[I/O Callback] --> B[Poll Phase]

B --> C[Execute I/O Callback]

C --> D[process.nextTick Queue]

C --> E[setImmediate Queue]

D --> F[Drain nextTick]

F --> G[Drain Microtasks]

G --> H[Check Phase]

H --> I[Execute setImmediate]

I --> J[Close Callbacks]

J --> K[Next Tick]
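The drain order in the diagram above can be checked with a small Node.js sketch. `nodeSchedulingOrder` is a hypothetical helper; it schedules everything from inside a timer callback so that the result does not depend on how the main module was loaded.

```javascript
// Hypothetical helper (Node.js only): records the order of Node's queues.
function nodeSchedulingOrder() {
  return new Promise((resolve) => {
    // Start from a macrotask so all callbacks are queued from the same place.
    setTimeout(() => {
      const order = [];

      // Check phase: runs after the nextTick and microtask queues are drained.
      setImmediate(() => {
        order.push("setImmediate");
        resolve(order);
      });

      // Microtask queue: drained after the nextTick queue.
      Promise.resolve().then(() => order.push("promise microtask"));

      // nextTick queue: drained first, right after the current callback.
      process.nextTick(() => order.push("process.nextTick"));

      order.push("sync");
    }, 0);
  });
}

nodeSchedulingOrder().then((order) => console.log(order.join(" -> ")));
// prints "sync -> process.nextTick -> promise microtask -> setImmediate"
```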

setTimeout vs setImmediate Ordering

graph LR

subgraph "Within I/O Cycle"

A[I/O Callback] --> B[setImmediate First]

B --> C[setTimeout Second]

end

subgraph "Outside I/O Cycle"

D[Main Module] --> E[Non-deterministic]

E --> F[Performance Dependent]

end
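The deterministic case (inside an I/O callback) can be sketched as follows. `orderInsideIoCallback` is a hypothetical helper, and `fs.stat` on the current working directory is used only as a convenient way to get into an I/O callback. Because that callback runs in the poll phase, the check phase comes next and setImmediate fires before the timer.

```javascript
const fs = require("node:fs");

// Hypothetical helper (Node.js only): schedules both callbacks from an
// I/O callback and records which one runs first.
function orderInsideIoCallback() {
  return new Promise((resolve, reject) => {
    fs.stat(process.cwd(), (err) => {
      if (err) {
        reject(err);
        return;
      }
      const order = [];
      const finishIfDone = () => {
        if (order.length === 2) resolve(order);
      };

      // Timers phase: only reached on the next loop iteration.
      setTimeout(() => {
        order.push("setTimeout");
        finishIfDone();
      }, 0);

      // Check phase: immediately follows the poll phase we are in now.
      setImmediate(() => {
        order.push("setImmediate");
        finishIfDone();
      });
    });
  });
}

orderInsideIoCallback().then((order) => console.log(order.join(" -> ")));
// prints "setImmediate -> setTimeout"
```

Calling the same two functions from the main module gives no such guarantee, which is the non-deterministic case in the diagram.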

True Parallelism: Worker Threads

Worker threads provide true parallelism: each worker runs on its own thread with its own JavaScript engine instance and its own independent event loop.

Worker Architecture

graph TB

subgraph "Main Thread"

A[Main Event Loop] --> B[UI Thread]

C[postMessage] --> D[Message Channel]

end

subgraph "Worker Thread"

E[Worker Event Loop] --> F[Background Thread]

G[onmessage] --> H[Message Handler]

end

subgraph "Communication"

I[Structured Clone] --> J[Copy by Default]

K[Transferable Objects] --> L[Zero-Copy Transfer]

M[SharedArrayBuffer] --> N[Shared Memory]

end

D --> E

H --> C

I --> D

K --> D

M --> D
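A minimal sketch of the message-passing path, using Node.js `worker_threads` (browser Web Workers follow the same idea with a different API). The helper `sumInWorker` and the inline worker source are illustrative assumptions; the `eval: true` option is used only to keep the example in a single file.

```javascript
const { Worker } = require("node:worker_threads");

// Worker body, kept inline for brevity. It sums 1..limit on its own thread
// and posts the result back; the message is copied via structured clone.
const workerSource = `
  const { parentPort, workerData } = require("node:worker_threads");
  let sum = 0;
  for (let i = 1; i <= workerData.limit; i++) sum += i;
  parentPort.postMessage({ sum });
`;

// Hypothetical helper: runs the CPU-bound loop off the main thread.
function sumInWorker(limit) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, {
      eval: true,
      workerData: { limit },
    });
    worker.once("message", (message) => resolve(message.sum));
    worker.once("error", reject);
  });
}

sumInWorker(1000).then((sum) => console.log(sum));
// prints 500500
```

The main event loop stays free to handle other callbacks while the worker computes.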

Memory Sharing Patterns

graph TD

subgraph "Communication Methods"

A[postMessage] --> B[Structured Clone]

C[Transferable Objects] --> D[Ownership Transfer]

E[SharedArrayBuffer] --> F[Shared Memory]

end

subgraph "Safety Mechanisms"

G[Thread Isolation] --> H["No Shared State by Default"]

I[Atomic Operations] --> J[Safe Coordination]

K[Message Passing] --> L[Explicit Communication]

end
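The shared-memory path can be sketched with a counter in a SharedArrayBuffer that several workers increment. This is an illustrative example using Node.js `worker_threads`; the helper names and the counts are assumptions. `Atomics.add` makes each increment indivisible, which is what keeps the final total exact; a plain `counter[0]++` from several threads could lose updates.

```javascript
const { Worker } = require("node:worker_threads");

// Worker body: increments slot 0 of the shared counter `times` times.
const incrementSource = `
  const { workerData } = require("node:worker_threads");
  const counter = new Int32Array(workerData.shared);
  for (let i = 0; i < workerData.times; i++) {
    Atomics.add(counter, 0, 1);
  }
`;

// Hypothetical helper: resolves when the worker has exited.
function runIncrementer(shared, times) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(incrementSource, {
      eval: true,
      // A SharedArrayBuffer is shared with the worker, not copied.
      workerData: { shared, times },
    });
    worker.once("error", reject);
    worker.once("exit", resolve);
  });
}

async function sharedCounterDemo(workerCount = 2, times = 10000) {
  const shared = new SharedArrayBuffer(Int32Array.BYTES_PER_ELEMENT);
  await Promise.all(
    Array.from({ length: workerCount }, () => runIncrementer(shared, times))
  );
  return Atomics.load(new Int32Array(shared), 0);
}

sharedCounterDemo().then((total) => console.log(total));
// prints 20000
```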

Best Practices and Performance Optimization

Environment-Agnostic Principles

graph TD

A[Keep Tasks Short] --> B[Avoid Blocking]

C[Master Microtask/Macrotask Choice] --> D[Proper Scheduling]

E[Avoid Starvation] --> F[Healthy Event Loop]

subgraph "Anti-patterns"

G[Long Synchronous Code] --> H[UI Blocking]

I[Recursive Microtasks] --> J[Event Loop Starvation]

K[Blocking I/O] --> L[Poor Performance]

end
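One way to keep tasks short is to split a long loop into chunks and yield to the event loop between them. The sketch below is a hedged example: `processInChunks` and `yieldToEventLoop` are hypothetical helpers, and the chunk size is an arbitrary default that would need tuning. It yields with a macrotask (setTimeout) on purpose, because yielding with a microtask would not let timers, I/O, or rendering run.

```javascript
// Yield with a macrotask so pending timers, I/O, and rendering get a turn.
const yieldToEventLoop = () =>
  new Promise((resolve) => setTimeout(resolve, 0));

// Hypothetical helper: processes items in chunks, yielding between chunks.
async function processInChunks(items, handleItem, chunkSize = 1000) {
  for (let start = 0; start < items.length; start += chunkSize) {
    const end = Math.min(start + chunkSize, items.length);
    for (let i = start; i < end; i++) {
      handleItem(items[i], i);
    }
    // Skip the final yield once everything has been processed.
    if (end < items.length) {
      await yieldToEventLoop();
    }
  }
}

// Usage: sum a large array without holding the call stack for the whole run.
const numbers = Array.from({ length: 5000 }, (_, i) => i + 1);
let total = 0;
processInChunks(numbers, (n) => {
  total += n;
}).then(() => console.log(total));
// prints 12502500
```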

Browser-Specific Optimization

graph LR

subgraph "Animation Best Practices"

A[requestAnimationFrame] --> B[Smooth 60fps]

C[setTimeout Animation] --> D["Jank / Dropped Frames"]

end

subgraph "Computation Offloading"

E[Web Workers] --> F[Background Processing]

G[Main Thread] --> H[UI Responsiveness]

end

Node.js-Specific Optimization

graph TD

subgraph "Scheduling Choices"

A[setImmediate] --> B[Post-I/O Execution]

C["setTimeout(0)"] --> D[Timer Phase]

E[process.nextTick] --> F[Critical Operations]

end

subgraph "Performance Tuning"

G[CPU-Bound Work] --> H[worker_threads]

I[I/O Bottleneck] --> J[Thread Pool Size]

K[Network I/O] --> L[Event Loop Capacity]

end

Performance Monitoring

graph LR

subgraph "Bottleneck Identification"

A[Event Loop Lag] --> B[CPU-Bound]

C[I/O Wait Time] --> D[Network/File I/O]

E[Thread Pool Queue] --> F[Blocking Operations]

end

subgraph "Monitoring Tools"

G[Event Loop Metrics] --> H[Lag Detection]

I[Memory Usage] --> J[Leak Detection]

K[CPU Profiling] --> L[Hot Paths]

end
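Event loop lag can be approximated by checking how late a repeating timer fires. The sketch below is a simple illustration with a hypothetical `measureEventLoopLag` helper and arbitrary interval and sample values; in Node.js, `perf_hooks.monitorEventLoopDelay` offers a built-in histogram that is likely a better fit for production monitoring.

```javascript
// Hypothetical helper: samples how late an interval timer fires, in ms.
function measureEventLoopLag(intervalMs = 20, samples = 5) {
  return new Promise((resolve) => {
    const lags = [];
    let expected = performance.now() + intervalMs;

    const timer = setInterval(() => {
      const now = performance.now();
      // Lag is the delay beyond the moment the callback was expected to run.
      lags.push(Math.max(0, now - expected));
      expected = now + intervalMs;

      if (lags.length === samples) {
        clearInterval(timer);
        resolve(lags);
      }
    }, intervalMs);
  });
}

measureEventLoopLag().then((lags) => {
  const worst = Math.max(...lags);
  console.log(`worst lag over ${lags.length} samples: ${worst.toFixed(2)} ms`);
});
```

A consistently high value usually points to long synchronous work on the main thread rather than slow I/O.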

Conclusion

The JavaScript event loop is not a monolithic entity but an abstract concurrency model with environment-specific implementations. Expert developers must understand both the universal principles (call stack, run-to-completion, microtask/macrotask hierarchy) and the divergent implementations (browser’s rendering-centric model vs Node.js’s I/O-centric phased architecture).

Key takeaways for expert-level development:

- Environment Awareness: Choose scheduling primitives based on the target environment

- Performance Profiling: Identify bottlenecks in the appropriate layer (event loop, thread pool, OS I/O)

- Parallelism Strategy: Use worker threads for CPU-intensive tasks while maintaining event loop responsiveness

- Scheduling Mastery: Understand when to use microtasks vs macrotasks for optimal performance

The unified mental model requires appreciating common foundations while recognizing environment-specific mechanics that dictate performance and behavior across the JavaScript ecosystem.

References

- The Node.js Event Loop Official Docs

- Libuv Design - The I/O Loop

- Node Interactive 2016 Talk - Everything You Need to Know About Node.js Event Loop - Bert Belder, IBM

- Node Interactive 2016 Talk Presentation

- A Deep Dive Into the Node js Event Loop - Tyler Hawkins

- A Deep Dive Into the Node js Event Loop - Code & Slides

- Node’s Event Loop From the Inside Out by Sam Roberts, IBM

- WHATWG HTML Living Standard - Event Loops

- ECMAScript 2024 - Jobs and Job Queues